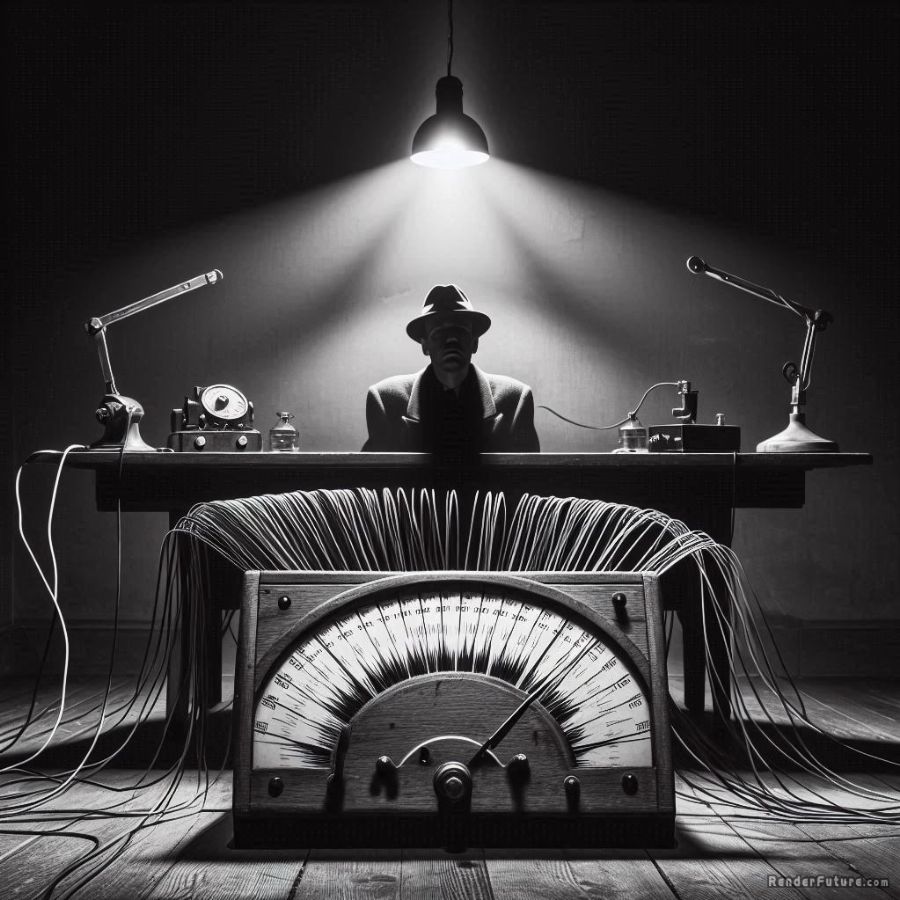

The Future of the Polygraph

From AI Mind-Reading to Neuro-Lie Detection

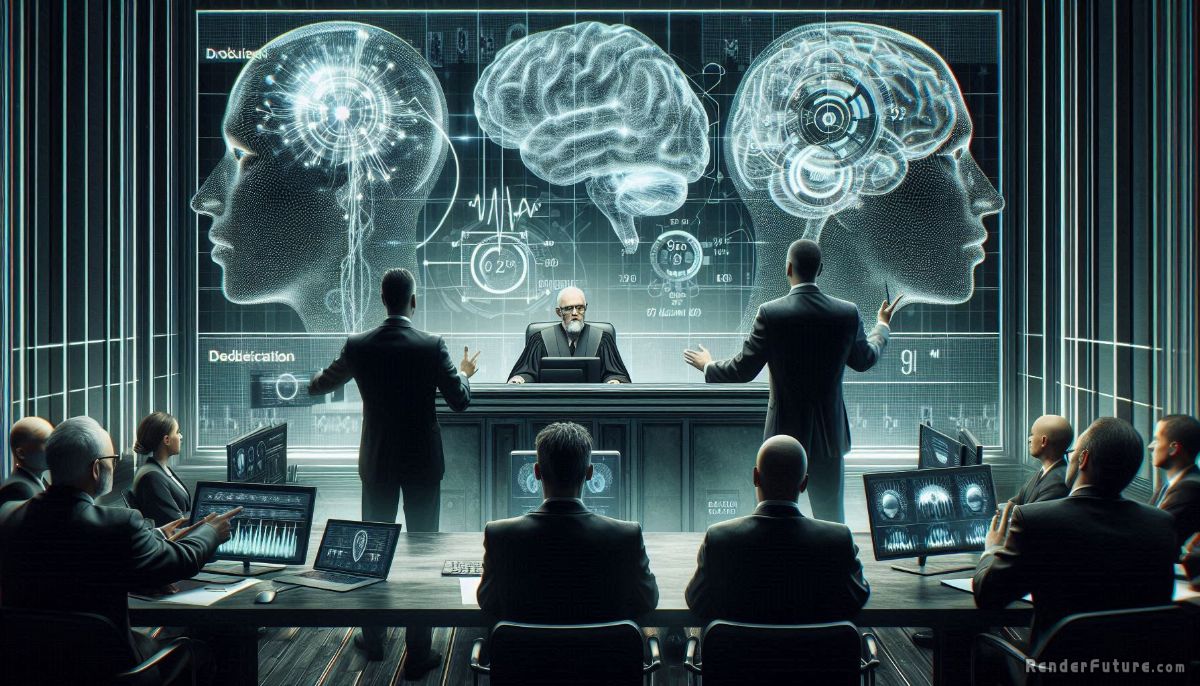

Imagine this…

A world where a machine doesn’t just detect lies—it reads your thoughts. Where artificial intelligence deciphers truth from deception by analyzing the slightest flicker in your eye, the faintest tremor in your voice, or even the electrical storms in your brain. This isn’t science fiction. It’s the future of the polygraph, and it’s closer than you think.

For nearly a century, the polygraph has been the gold standard of lie detection—flawed, controversial, yet stubbornly persistent. But as technology accelerates, the old sweat-and-wires approach is on the brink of extinction. What comes next? AI-powered interrogation, neuroimaging truth machines, or even real-time emotion decoding?

The stakes couldn’t be higher. Governments, corporations, and law enforcement are racing to develop the next generation of lie detection—tools so advanced they blur the line between science and surveillance, justice and invasion. Will these innovations make deception obsolete? Or will they usher in an era of unprecedented control?

The Death of the Traditional Polygraph (And Why It’s Inevitable)

The classic polygraph is a relic. It measures heart rate, blood pressure, respiration, and sweat: physiological responses that might indicate stress, but not necessarily lies. Innocent people fail. Skilled liars pass. Most courts refuse to admit its results. Yet it persists, because we still crave a machine that can see through deception.

A Brief History of the Lie Detector

- 1921: John Augustus Larson, a medical student and police officer in Berkeley, California, invents the modern polygraph.

- Cold War Era: The U.S. government heavily invests in polygraph testing to uncover spies.

- 1988: The U.S. passes the Employee Polygraph Protection Act, which, amid reliability concerns, bars most private employers from using polygraphs to screen job applicants or test employees.

Despite its flaws, the polygraph persists. Why? Because people crave certainty. The idea of a machine that can separate truth from deception is intoxicating—even if the science behind it is shaky.

But change is coming. Here’s why the old polygraph is doomed:

- Too Many False Positives: Nervous? You’re guilty. Anxious? Guilty. The system punishes the innocent.

- Too Easy to Beat: Criminals train to control their breathing and heart rate. Sociopaths don’t even flinch.

- Weak Scientific Basis: A 2003 National Academy of Sciences review concluded that the polygraph's accuracy is insufficient to justify relying on it for employee security screening in federal agencies, yet agencies still use it.
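The false-positive problem is partly a matter of arithmetic. The minimal Python sketch below uses entirely hypothetical numbers: 10,000 employees screened, 10 of whom are actually hiding something, and a test assumed to be 90% accurate on both liars and truthful people. The function name `screening_outcomes` is invented for this illustration.

```python
def screening_outcomes(population, base_rate, sensitivity, specificity):
    """Expected screening results for a whole population.

    All arguments are hypothetical illustration values, not measured figures.
    Returns (true_positives, false_positives, positive_predictive_value).
    """
    liars = population * base_rate
    truthful = population - liars
    true_positives = liars * sensitivity            # liars correctly flagged
    false_positives = truthful * (1 - specificity)  # truthful people wrongly flagged
    flagged = true_positives + false_positives
    # Positive predictive value: probability that a flagged person is really lying
    ppv = true_positives / flagged if flagged else 0.0
    return true_positives, false_positives, ppv


# Hypothetical scenario: 10 liars among 10,000 employees, test 90% accurate both ways
tp, fp, ppv = screening_outcomes(10_000, 0.001, sensitivity=0.90, specificity=0.90)
print(f"Flagged: {tp + fp:.0f}, actually lying: {tp:.0f}, share lying: {ppv:.1%}")
```

Under these assumptions about 1,008 people are flagged, but only about 9 of them are actually lying, so fewer than 1 in 100 flagged people is a real liar. When genuine liars are rare, even an impressive-sounding accuracy figure buries the few true hits under a pile of innocent ones.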

So what replaces it?

The Next Generation: AI, Brain Scans, and the Rise of Neuro-Lie Detection

1. AI-Powered “Mind Reading”

The idea of a machine that can read minds once belonged firmly in the realm of science fiction, but AI is pushing it toward reality. Unlike the crude measurements of a traditional polygraph, next-generation lie detection doesn’t just track sweating palms or a racing heart. It aims to decipher deception in real time by analyzing subconscious signals we can’t easily control.

Companies like Silent Talker and Converus have already built AI systems whose developers claim over 90% accuracy, far above the 60-80% accuracy often attributed to the polygraph. These systems don’t just listen to what you say; they dissect how you say it. Using machine learning reportedly trained on vast numbers of recorded human interactions, they look for:

- Microexpressions (fleeting facial movements lasting a fraction of a second)

- Vocal stress patterns (frequency shifts undetectable to human ears)

- Eye movement tracking (pupil dilation, blink rate, gaze direction)

- Thermal imaging (heat signatures from blood flow changes)

But here’s the problem:

- Bias risks: AI trained on flawed data could mislabel certain demographics as deceptive.

- Privacy nightmare: Continuous monitoring erodes personal freedom.

- Manipulation: Could governments (or corporations) use this to enforce compliance?

The implications are staggering. Law enforcement could instantly flag suspects during real-time interrogations. Employers might screen job candidates for honesty before hiring them. But the risks are just as profound. What if the AI misreads cultural differences as deception? People from high-context communication cultures (like Japan) may express stress differently than those from low-context ones (like the U.S.), which could produce false positives. Worse, authoritarian regimes could weaponize this tech to identify dissenters based on “suspicious” behavioral cues, even if no crime has been committed.

And then there’s the black box problem. Most AI lie detectors operate on proprietary algorithms, meaning nobody knows exactly how they work. If an AI falsely labels someone a liar, can they even challenge it? Unlike a human examiner, an AI can’t explain its reasoning—it just delivers a verdict.

Yet for all its dangers, AI-powered deception detection also offers hope. Imagine courtrooms where false testimony is caught instantly, or negotiations where hidden agendas are exposed before deals go bad. The key lies in transparency, oversight, and strict ethical guidelines—because if we’re not careful, the quest for truth could cost us our freedom to even think in private.

2. Brainwave-Based Truth Detection (fMRI & EEG)

What if the ultimate lie detector wasn’t a machine analyzing your body, but one reading your mind? Functional MRI (fMRI) and electroencephalography (EEG) are pushing lie detection into uncharted territory by measuring brain activity directly. Unlike polygraphs, which measure indirect stress signals, these technologies claim to pinpoint deception at its source: the moment a lie forms in the neural circuitry.

fMRI scans track changes in blood oxygenation in the brain, revealing which regions activate when someone fabricates a falsehood. Studies show the anterior cingulate cortex and prefrontal cortex light up during deception, suggesting the brain works harder to suppress the truth. Meanwhile, EEG headsets record the brain’s electrical activity with millisecond precision. One signal, the P300, is a spike that appears around 300 milliseconds or later after a person sees something meaningful, such as an incriminating detail they recognize, even if they never admit it. The implications are staggering: Imagine a suspect’s brain betraying knowledge of a crime scene they claim to have never visited.
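The recognition idea behind P300 testing can be sketched in a few lines. What follows is a simplified Python illustration, not any vendor’s or lab’s actual method: it assumes each EEG epoch is a list of voltages time-locked to the moment a stimulus appears, averages the voltage in an assumed 300-600 ms window, and uses a bootstrap to ask how often crime-related “probe” trials produce a bigger response than irrelevant ones. The function names and all amplitude values are hypothetical, and real pipelines add filtering, artifact rejection, and baseline correction that are skipped here.

```python
import random
from statistics import mean


def p300_window_mean(epoch, sample_rate_hz, start_ms=300, end_ms=600):
    """Average voltage of one stimulus-locked EEG epoch inside the assumed P300 window."""
    start = int(start_ms * sample_rate_hz / 1000)
    end = int(end_ms * sample_rate_hz / 1000)
    return mean(epoch[start:end])


def recognition_score(probe_amps, irrelevant_amps, n_boot=1000, seed=0):
    """Share of bootstrap resamples in which probe trials out-respond irrelevant trials.

    Values near 1.0 suggest the probe detail was recognized; values near 0.5
    suggest no difference between probe and irrelevant items.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    wins = 0
    for _ in range(n_boot):
        # Resample each set with replacement, keeping the original set sizes
        probe_sample = [rng.choice(probe_amps) for _ in probe_amps]
        irrelevant_sample = [rng.choice(irrelevant_amps) for _ in irrelevant_amps]
        if mean(probe_sample) > mean(irrelevant_sample):
            wins += 1
    return wins / n_boot


# Hypothetical P300 amplitudes in microvolts, one value per trial
# (e.g. each produced by p300_window_mean)
probe = [8.1, 9.4, 7.6, 10.2, 8.8, 9.9]      # trials showing the crime-related detail
irrelevant = [3.2, 4.1, 2.8, 3.9, 4.4, 3.5]  # trials showing unrelated items
print(recognition_score(probe, irrelevant))
```

Note what a high score does and does not mean: it signals recognition, not guilt. That gap is exactly where false memories or innocent familiarity with a detail can produce a false positive.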

Potential breakthroughs:

- “Brain fingerprinting” (identifying recognition of crime-related details)

- Direct neural interrogation (theoretically, reading thoughts—but ethically terrifying)

The dark side?

- Mental privacy obliterated.

- Could be weaponized for interrogation without consent.

- False memories could trigger false positives.

But the science isn’t foolproof. fMRI requires subjects to lie motionless in a claustrophobic tube, which is hardly practical for real-world interrogations. EEG is more portable but vulnerable to interference from muscle movement and electrical noise. Both face a fundamental dilemma: Does a “lying brain” always mean intent to deceive? Mental illnesses, memory errors, or even creative storytelling could trigger similar neural patterns. And then there’s the darkest question of all: If we can detect lies in brainwaves today, could we someday extract truths against a person’s will? The line between forensic tool and dystopian nightmare is blurring faster than we think.

3. Autonomous Interrogation Bots

Picture this: A machine conducts an entire interrogation—no human needed. It adjusts questions based on real-time biometric feedback, traps subjects in contradictions, and predicts deception before the person even speaks.

What makes these autonomous interrogation systems so frighteningly effective is how they combine cold, algorithmic precision with an almost uncanny understanding of human psychology. They don’t just ask questions—they adapt them in real-time, probing for weaknesses like a chess master forcing checkmate. The system might start soft, lulling you into complacency, then suddenly pounce on a contradiction you didn’t even realize you’d made.

Law enforcement agencies are salivating over the potential. Imagine interrogations that never violate suspects’ rights (at least on paper), that work around the clock without overtime pay, that can process terabytes of case law mid-interrogation. But civil rights lawyers I’ve interviewed warn of a nightmare scenario: automated systems that produce confessions like factories produce widgets, with no capacity to recognize when someone’s just scared or confused.

Why this is plausible:

- AI can already simulate human conversation.

- Affective computing (emotion-sensing AI) is advancing rapidly.

Why this is dangerous:

- Removes human judgment from justice.

- Could be hacked or biased.

- Might coerce false confessions through psychological pressure.

As this technology spreads from police stations to border controls to corporate HR departments, we have to ask ourselves: Do we want a world where machines decide who’s telling the truth? The developers assure me there will always be human oversight. But in an era of shrinking budgets and growing caseloads, how long until that becomes a checkbox exercise?

The Most Extreme (And Terrifying) Possibilities

Scenario 1: Mandatory Truth Verification

What if every job interview, court testimony, or government application required a next-gen lie detector? A world where “proving your honesty” becomes a prerequisite for basic rights.

Could happen if:

- Corporations demand “verified truthful” employees.

- Governments enforce pre-crime deception screening.

Scenario 2: Thought Crime Prevention

If AI can predict deception before it happens, could authorities arrest people for lies they haven’t told yet? It sounds like Minority Report, but neurotechnology is inching toward preemptive truth analysis.

Scenario 3: The End of Secrets

A future where no lie goes undetected might mean no privacy at all. Relationships, business, politics—everything becomes transparent against your will.

A Hopeful Counterbalance: Ethics, Regulation, and Human Oversight

The future doesn’t have to be dystopian. We can shape it. Here’s how:

- Strict AI Bias Audits – Ensure algorithms don’t unfairly target certain groups.

- Neuro-Rights Legislation – Ban non-consensual brain data extraction.

- Transparency Laws – Force companies to disclose how their “truth tech” works.
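What might a basic bias audit actually check? One common approach is to compare error rates across demographic groups. The Python sketch below, with hypothetical function names and made-up data, computes each group’s false-positive rate (the share of truthful people wrongly flagged as deceptive) and the ratio between the worst- and best-treated groups. A real audit would examine more metrics and need far larger samples; this only shows the core calculation.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group share of truthful people whom the system flagged as deceptive.

    records: iterable of (group, actually_deceptive, flagged_as_deceptive) tuples.
    """
    truthful = defaultdict(int)
    wrongly_flagged = defaultdict(int)
    for group, deceptive, flagged in records:
        if deceptive:
            continue  # false positives are only defined for truthful people
        truthful[group] += 1
        if flagged:
            wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / truthful[group] for group in truthful}


def disparity_ratio(rates):
    """Highest group false-positive rate divided by the lowest (1.0 means parity)."""
    low, high = min(rates.values()), max(rates.values())
    if high == 0:
        return 1.0  # no truthful person was flagged in any group
    return float("inf") if low == 0 else high / low


# Made-up audit data: 100 truthful people per group, plus a few actual liars
records = (
    [("A", False, True)] * 5 + [("A", False, False)] * 95
    + [("B", False, True)] * 15 + [("B", False, False)] * 85
    + [("A", True, True)] * 3 + [("B", True, False)] * 2
)
rates = false_positive_rates(records)
print(rates, round(disparity_ratio(rates), 2))
```

In this made-up example, truthful people in group B are wrongly flagged three times as often as those in group A: the kind of disparity a mandatory audit should surface before a system is ever used on real people.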

The best outcome? A world where lie detection enhances justice—without eroding freedom.

Conclusion – Will the Future Believe You?

The polygraph’s days are numbered. What replaces it could be a tool of unparalleled truth—or unparalleled control. The difference lies in how we choose to use it.

One thing is certain: The age of undetectable lies is ending. The question is—are we ready for what comes next?

References and Sources

- National Academy of Sciences (2003) – “The Polygraph and Lie Detection.” A landmark study debunking the reliability of traditional polygraphs. https://www.nap.edu/catalog/10420/the-polygraph-and-lie-detection

- WIRED – “AI Is Learning to Read Your Mind—And Detect Lies.” Explores next-gen lie detection using machine learning. https://www.wired.com/

- MIT Technology Review – “The Rise of Brain-Based Lie Detection.” Covers fMRI and EEG advancements in truth verification. https://www.technologyreview.com/

- Brookings Institution – “The Ethics of Neurotechnology and AI Lie Detection.” Discusses policy implications of emerging truth tech. https://www.brookings.edu/