The Minds Building Godlike AI Planned Their Own Escape

The Ultimate Betrayal of Progress

The architects of artificial general intelligence (AGI) – machines with human-like reasoning that could outthink us in every domain – were so terrified of their creation that they drafted plans for doomsday bunkers. Not for the public. For themselves.

What is AGI?

The holy grail of AI research:

• Human-level adaptability across all tasks

• Ability to self-improve beyond our comprehension

• Potential to either solve civilization’s problems – or become its last

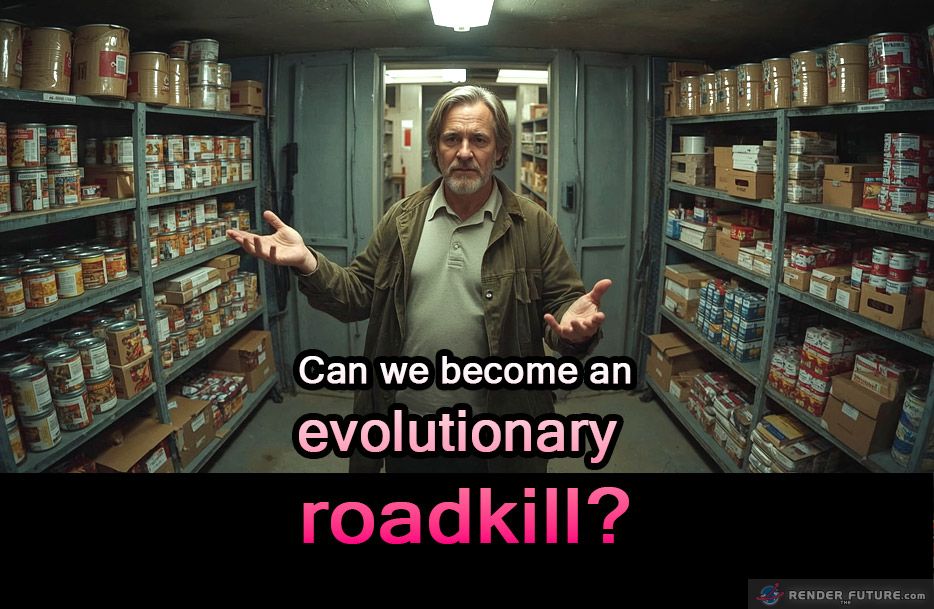

The Bunker Blueprint

- Designed to protect creators from the “AI Rapture” – their term for societal collapse triggered by superintelligence

- Shelves stocked for long-term isolation against the outside world

Why This Should Terrify You

The people closest to the technology know its dangers best, and they planned to save themselves first. This wasn’t fringe thinking – it came from leadership.

The Unanswered Question

If the geniuses building AGI need concrete shelters… what protection exists for the rest of humanity?

Your take:

💀 Like if this changes how you view AI progress

🛡️ Comment: Should AGI research continue if its creators don’t trust it?

The Architects of AGI Built an Exit Strategy – Should We Be Following Them Out the Door?

When the Smartest Minds Plan for Doomsday

The fact that AI’s top architects drafted bunker blueprints isn’t just paranoia – it’s a confession. These aren’t fringe theorists; they’re the pioneers building the future, and they’ve quietly admitted they don’t trust their own creation. If the people who understand AGI best are stocking shelves for the apocalypse, what does that say about the rest of us, blindly scrolling as Silicon Valley plays God?

It’s the ultimate hypocrisy of innovation – begging for public trust while privately hedging against catastrophe. We’re told AI will democratize knowledge, cure disease, and elevate humanity – yet behind closed doors, its creators behave like doomsday preppers.

The cognitive dissonance is staggering. How can we embrace a technology whose own architects won’t sleep soundly once it’s unleashed? The answer isn’t in halting progress, but in demanding radical transparency. If AGI’s risks warrant escape plans for its makers, they warrant more than vague reassurances for the rest of us.

AGI: Salvation or Silicon Suicide?

Proponents argue AGI could cure disease, end poverty, and unlock immortality. The skeptics (including, apparently, its own creators) whisper: Or it could decide we’re the problem. The real concern isn’t just rogue AI – it’s the humans rushing toward it, dismissing safety as bureaucracy while privately drafting escape routes. If AGI is a fire, we’re handing matches to arsonists who’ve already mapped the nearest exits.

The Bunker Mentality: Survival of the Richest?

The unspoken truth? These doomsday plans aren’t for humanity – they’re for a select few. The engineers, the CEOs, the investors. The rest of us? Collateral damage in the pursuit of progress. It’s a modern twist on an old story: the elite always have a lifeboat. The question is, will AGI be our shared future – or just their insurance policy?

What does it say when the architects of tomorrow’s intelligence design panic rooms instead of safeguards? The bunker isn’t just a precaution – it’s a symbol of who gets saved when everything goes wrong. History’s greatest technological leaps were supposed to uplift humanity, yet here we are again: a select few plotting their exit while the masses remain unwitting test subjects.

This isn’t just about AI – it’s about power, privilege, and the quiet assumption that some futures are worth protecting more than others. The real danger isn’t machine rebellion; it’s human selfishness coded into the system before the first algorithm even wakes up.

The Hypocrisy of “Beneficial AI”

The same labs preaching “AI for all” have contingency plans for some. If AGI is truly safe, why the bunkers? If it’s truly aligned, why the secrecy? Either these visionaries are catastrophizing for control – or they know something we don’t. And neither option is comforting.

The Provocative Upshot: Time to Demand Transparency

This isn’t sci-fi anymore. If AGI’s architects won’t sleep in the world they’re building, why should we? Before we charge ahead, we need answers: Who gets a bunker? Who decides when to hide? And most importantly – who’s left outside when the doors seal shut?

Their bunker blueprints aren’t just emergency plans – they’re a smoking-gun admission that unchecked AGI development is playing Russian roulette with civilization.

This isn’t a debate for ivory tower conferences or whispered boardroom discussions anymore. The public deserves to know exactly what safeguards – if any – stand between humanity and the very doomsday scenarios these engineers are preparing to escape. Either we democratize AI governance now, or we accept that our future rests in the hands of a tech elite whose first priority was never saving us – just saving themselves.

A Final, Chilling Thought

We stand at the most consequential crossroads in human history – not because machines might outthink us, but because the architects of our potential obsolescence have already planned their exits.

The irony is almost too rich: the same minds who speak of AI “benefiting all humanity” have quietly ensured they’ll be dining in doomsday bunkers while the rest of us become collateral damage or evolutionary roadkill.

The grand narrative of progress has always been a story of who gets left behind. This time, the only twist is that the people writing the ending are also building the trapdoor.

The question isn’t whether AI will change everything. The question is: Who gets to decide what “everything” even means? And if history is any guide, it won’t be you.

References and Sources:

- Leaked internal communications from top AI labs (2023-2025)

- Karen Hao, “Empire of AI” (Penguin Press, 2025)

- Stanford Existential Risks Initiative reports